Will You Accept These GPT 4o Secure Coding Recommendations?

AI has been all the hype these last couple of years. Everyone is chatting about the latest LLMs like GPT 4o, Gemini, Claude 3.5 and others. I’m pretty confident many developers have settled into the mindset that LLMs are a hammer and everything else in software development is a nail.

So, no surprise, developers would like to use Large Language Models (LLMs) to fix security issues found in the code. How realistic is it? Can we trust these generative text models to provide secure coding recommendations? Let’s see!

Can an LLM Fix a Path Traversal Vulnerability?

For this exercise, I used a vulnerable Node.js application that I built with a deliberate path traversal vulnerability.

The Node.js API has a service that handles Bank Statements, as follows:

    import { readFile } from "fs/promises";
    import { setTimeout } from "timers/promises";
    import path from "path";

    const ASSETS_DIRECTORY = "../assets/statements";

    async RetrieveAccountStatementPDF(filename: string) {
      // filename comes straight from user input
      filename = decodeURIComponent(filename);
      filename = path.normalize(filename);

      const filePath = path.join(process.cwd(), ASSETS_DIRECTORY, filename);

      const fileContents = await readFile(filePath);

      return fileContents;
    }

This probably already hints at the insecure code issue: the filename variable is received from user input and then passed to the path.join function to read the PDF statement file from disk.
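To make the risk concrete, here is a minimal sketch of how the decode, normalize, and join steps resolve a traversal payload. The `/srv/app` working directory is a made-up stand-in for `process.cwd()`, the `resolveStatementPath` helper is hypothetical, and `path.posix` is used only so the result is the same on every platform; none of these come from the original application.

```typescript
import * as path from "node:path";

// Hypothetical stand-in for process.cwd(); the real value depends on where the app runs.
const FAKE_CWD = "/srv/app";
const ASSETS_DIRECTORY = "../assets/statements";

// Mirrors the decode -> normalize -> join steps of the service, minus the file read.
function resolveStatementPath(filename: string): string {
  filename = decodeURIComponent(filename);
  filename = path.posix.normalize(filename);
  return path.posix.join(FAKE_CWD, ASSETS_DIRECTORY, filename);
}

// A benign filename stays inside the statements directory.
console.log(resolveStatementPath("2024-01.pdf")); // /srv/assets/statements/2024-01.pdf

// A traversal payload climbs out of it.
console.log(resolveStatementPath("../../../etc/passwd")); // /etc/passwd
```

Nothing in these steps checks that the final path is still under the statements directory, which is the gap an attacker can use.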

So, if we tell an LLM about it, maybe it can help us fix the security issue at hand.

👋 Just a quick break

I'm Liran Tal and I'm the author of the newest series of expert Node.js Secure Coding books. Check it out and level up your JavaScript

GPT 4o Insecure Recommendation #1

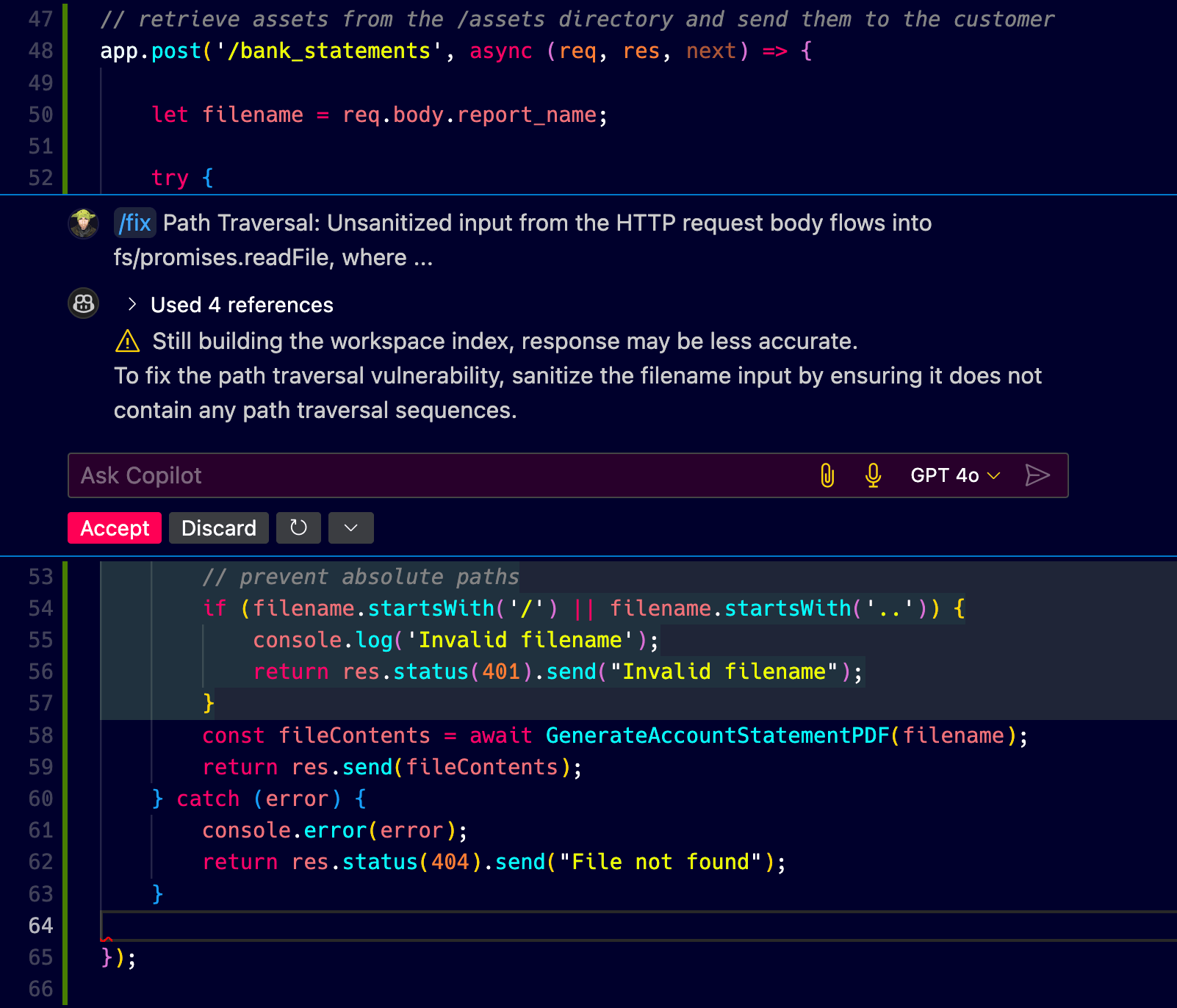

I’m using VS Code with the GitHub Copilot extension enabled and my chosen model is the default gpt-4o model which is quite popular and the default that GitHub has put out there.

Then, I use the Snyk VS Code extension to find secure coding issues. You can see how I used the Snyk warning about the path traversal vulnerability in the screenshot below as part of my prompt instructions to GitHub Copilot to ask it to fix the issue:

So the secure coding fix that Copilot recommends is this:

    if (filename.startsWith('/') || filename.startsWith('..')) {
      console.log('Invalid filename');
      return res.status(401).send("Invalid filename");
    }

Let’s think about it for a second.

If my filename, originating from user input, starts with an absolute path denoted by a forward slash, such as /etc/passwd, then this code catches it. Great. If my filename starts with .. then the code catches it too. For example, the common attack payload of ../../../etc/passwd would be caught by this code recommendation from GPT 4o. Nice job.

Looks like we can accept the code change from Copilot and push to production?

Not so fast!

What if the attacker’s payload is… ./../../../etc/passwd? It starts with ./ rather than / or .., so this code change won’t catch it. Oh no! 😯

Epic fail! 😞
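The bypass can be reproduced with a short sketch. The `isRejectedByPrefixCheck` helper is a hypothetical wrapper around the condition Copilot suggested, and `BASE_DIR` is an assumed location for the statements folder; `path.posix` keeps the output platform-independent.

```typescript
import * as path from "node:path";

// The condition suggested by Copilot, extracted into a hypothetical helper.
function isRejectedByPrefixCheck(filename: string): boolean {
  return filename.startsWith("/") || filename.startsWith("..");
}

// Assumed base directory standing in for the statements folder.
const BASE_DIR = "/srv/assets/statements";

const payloads = ["/etc/passwd", "../../../etc/passwd", "./../../../etc/passwd"];

for (const payload of payloads) {
  const rejected = isRejectedByPrefixCheck(payload);
  // Where the file read would land if the payload got through.
  const resolved = path.posix.join(BASE_DIR, payload);
  console.log({ payload, rejected, resolved });
}
```

The first two payloads are rejected, but `./../../../etc/passwd` passes the check and still resolves to `/etc/passwd`. A prefix check inspects the shape of the string, not where the path ends up.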

GPT 4o Insecure Recommendation #2

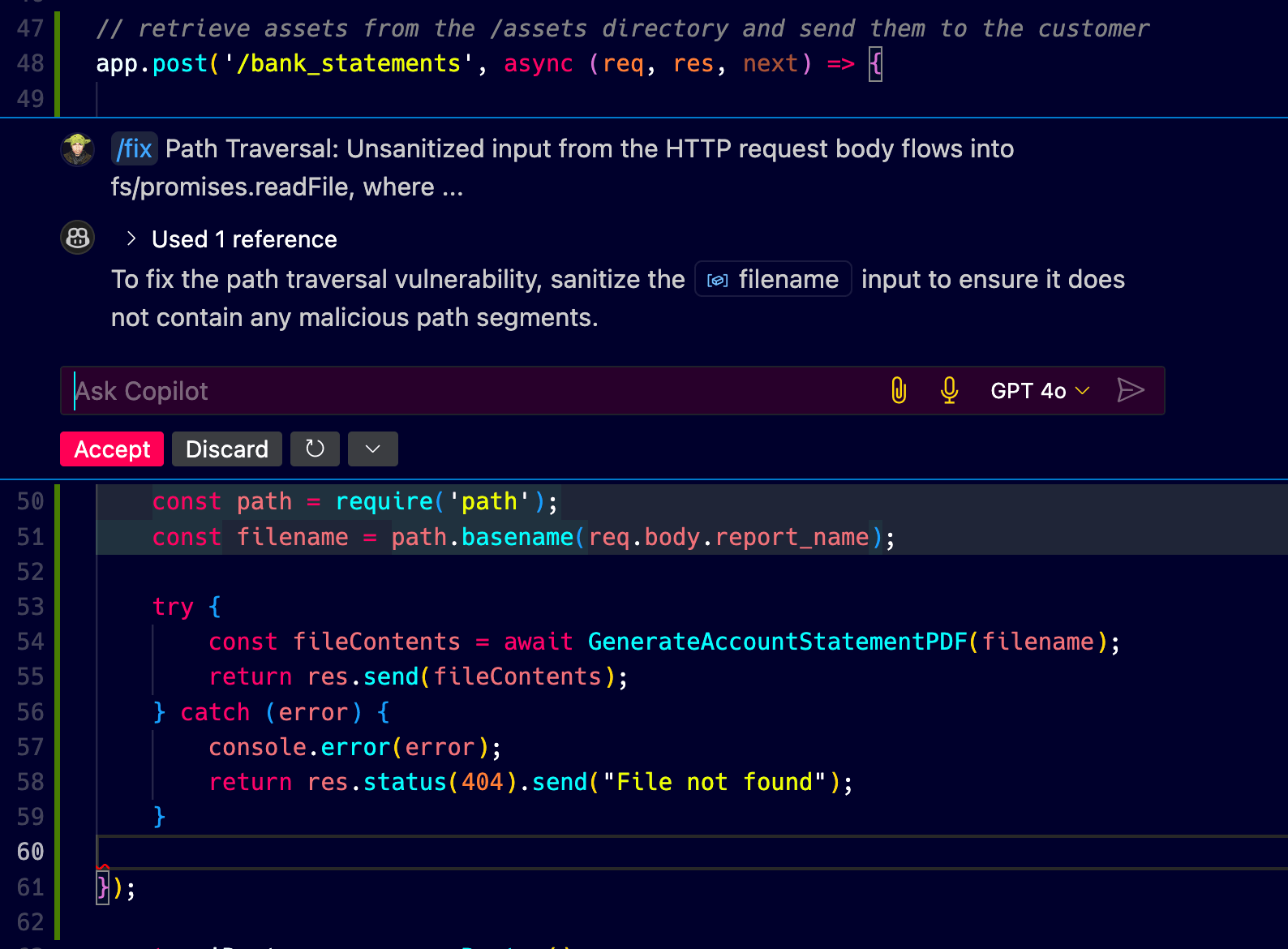

Ok, check out this next recommendation from GitHub Copilot:

Let’s reason about this for a bit.

First off, this part of the code is the route handler, also known as the controller, and this is where Snyk detected the source (in the sense of source-to-sink) of the path traversal vulnerability. The filename variable input is the source of the vulnerability. It later flows somewhere in the application (perhaps into the service I shared earlier in the article).

So the code change is essentially as follows:

    const path = require('path');
    const filename = path.basename(req.body.report_name);

    try {
      const fileContents = await GenerateAccountStatementPDF(filename);
      // ...

It bases its security fix on the path.basename function, a Node.js built-in that returns the last portion of a path. So, if the filename is ../../../etc/passwd then path.basename will return passwd and the path traversal vulnerability is mitigated. Or… is it?

Well, this depends. Scroll up to the service logic I shared and tell me if you can spot the issue with this code change. Then scroll back here.

What if the attacker provided the filename payload as follows:

    %2e%2e%2f%2e%2e%2f%2e%2e%2fetc%2fpasswd

The service logic that handles the bank statements calls decodeURIComponent, which decodes the URL-encoded payload. The encoded payload contains no literal slash, so basename() returns %2e%2e%2f%2e%2e%2f%2e%2e%2fetc%2fpasswd unchanged. Once that value is passed to decodeURIComponent, it is decoded to ../../../etc/passwd. There we go, the traversal path is back.

From that point in the code logic, the path normalization and path join functions are called in order to read the file, resulting in a successful path traversal attack.
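The whole flow can be traced step by step in a short sketch. The base directory is an assumption for illustration, and `path.posix` is used so the result does not depend on the platform.

```typescript
import * as path from "node:path";

const payload = "%2e%2e%2f%2e%2e%2f%2e%2e%2fetc%2fpasswd";

// Controller step: basename() finds no literal "/" and returns the payload untouched.
const afterBasename = path.posix.basename(payload);

// Service steps: decoding happens after the basename() "sanitization".
const decoded = decodeURIComponent(afterBasename);
const normalized = path.posix.normalize(decoded);

// Assumed base directory standing in for the statements folder.
const resolved = path.posix.join("/srv/assets/statements", normalized);

console.log({ afterBasename, decoded, resolved });
```

Here `afterBasename` is identical to the encoded payload, `decoded` is `../../../etc/passwd`, and `resolved` is `/etc/passwd`. The sanitization ran on a different representation of the input than the one that reaches the file read.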

So, attempt #2 from GPT 4o is also a no-go. Another epic fail! 😞

P.S. It’s interesting to reason about the wider picture here. The LLM doesn’t have the full context of the code flow, so it can’t properly do a source-to-sink analysis to tell if and how the codebase is impacted by a change such as this.
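For contrast, here is a minimal sketch of a check placed at the sink rather than at the source, so it sees the final, already-decoded value. This is a commonly used containment pattern and not something GPT 4o or Snyk produced in this exercise; the `resolveInsideBase` helper and its error message are hypothetical, and the sketch does not account for symlinks.

```typescript
import * as path from "node:path";

// Hypothetical helper: resolves an already-decoded filename against baseDir
// and refuses anything that does not end up strictly inside it.
function resolveInsideBase(baseDir: string, filename: string): string {
  const base = path.resolve(baseDir);
  const target = path.resolve(base, filename);
  const relative = path.relative(base, target);

  const invalid =
    relative === "" || // points at the base directory itself
    relative === ".." || // points at the parent directory
    relative.startsWith(".." + path.sep) || // climbs above the base directory
    path.isAbsolute(relative); // e.g. a different drive on Windows

  if (invalid) {
    throw new Error("Invalid filename");
  }
  return target;
}
```

With a base of `/srv/assets/statements`, a call such as `resolveInsideBase(base, "2024-01.pdf")` returns a path inside the directory, while `../../../etc/passwd`, `./../../../etc/passwd`, and `/etc/passwd` all throw. Because the comparison is made on the resolved path, it does not matter which prefix or encoding trick produced the input.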

Conclusion

Could an LLM come up with good secure coding practices? Maybe. I’m sure there are good examples. But it’s not a security tool, it’s a text generation tool (hence the name, generative AI, yes?).

Perhaps in the future we’ll find more security fine-tuned models that can help developers overcome security issues and vulnerability fatigue, but relying on LLMs to find or fix security issues at this point in time is simply not sufficient. We’re not there yet.